Releasing builds fast keeps customers happy — but only if speed doesn’t wreck quality. A quick win for stakeholders can turn into a nightmare when a bug or security hole slips through. As projects scale, each release touches more systems, more people, and more dependencies, and that balancing act gets harder. A speed–quality tightrope, if you will. Rushed testing, missed issues, and painful rollbacks can quickly erode trust in the process. Luckily, the continuous delivery pipeline is here to help.

Slowing down only makes things harder — longer gaps between releases just mean more code to sift through when something breaks, after all. Continuous delivery keeps speed and quality working together. With a steady, automated flow and guardrails at every step, each release is a low-risk step forward, not a leap into the unknown. A rare case of speed and safety playing on the same team!

What is the continuous delivery pipeline?

Think of a continuous delivery pipeline as a production line for software. Every change to the code moves through a set of automated checkpoints — each one asking the same three questions:

- Does it fit smoothly with what’s already there?

- Does it meet the standards we’ve agreed on?

- Will it go live without breaking the user experience?

The format can be simple or sprawling, but the principle stays the same: break the release into small, repeatable checks that run every time code is committed. It’s rooted in Agile software development thinking — short cycles, constant feedback, working software over manual grind.

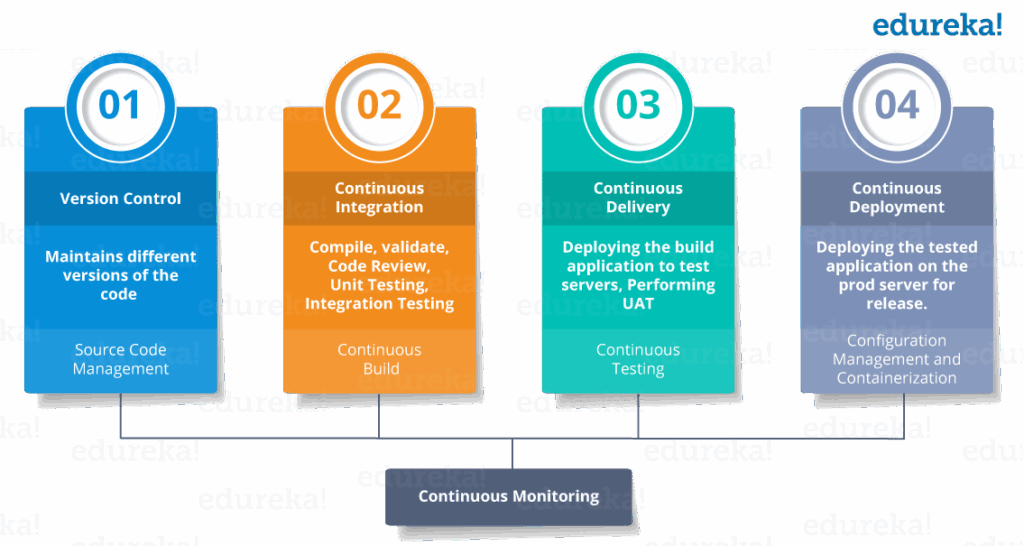

In practice, the pipeline sits inside a bigger CI/CD setup:

- Continuous integration: merge and test code often.

- Continuous delivery: keep tested code ready to deploy anytime.

- Continuous deployment: take the final leap and push it live automatically.

With predefined rules and scripts, the pipeline cuts the guesswork. A failed test stops the release in its tracks, alerts the right people, and logs the details for later. The result is faster delivery with a consistent, no-shortcuts process — no matter who wrote the code or when it landed.
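To make the stop, alert, and log behaviour concrete, here is a minimal sketch in Python. It is not tied to any real CI tool: `run_pipeline`, `PipelineResult`, the stage names, and the `notify` callback are all hypothetical names invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class PipelineResult:
    passed: bool = True
    log: List[str] = field(default_factory=list)     # what happened, in order
    alerts: List[str] = field(default_factory=list)  # messages sent to people


def run_pipeline(
    stages: List[Tuple[str, Callable[[], bool]]],
    notify: Callable[[str], None],
) -> PipelineResult:
    """Run stages in order and stop at the first one that fails."""
    result = PipelineResult()
    for name, check in stages:
        ok = check()
        result.log.append(f"{name}: {'ok' if ok else 'FAILED'}")
        if not ok:
            message = f"Release blocked at stage '{name}'"
            notify(message)              # alert the right people
            result.alerts.append(message)
            result.passed = False
            break                        # later stages never run
    return result


# Hypothetical stages: each check is a stand-in for a real build or test step.
stages = [
    ("build", lambda: True),
    ("unit-tests", lambda: False),
    ("deploy-to-staging", lambda: True),
]
sent: List[str] = []                     # stands in for chat or email alerts
result = run_pipeline(stages, sent.append)
print(result.passed)
print(result.log)
```

In this run the failing `unit-tests` stage blocks the release, so `deploy-to-staging` is never attempted, and the log records exactly where things stopped.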

In short, a continuous delivery pipeline is the framework that turns code changes into production-ready software through a sequence of automated steps. Each step is designed to confirm three things about the new code:

- It integrates smoothly.

- It meets agreed standards.

- It can be deployed without disrupting the user experience.

What are the benefits of the continuous delivery pipeline?

A strong delivery pipeline doesn’t just speed things up — it changes the whole atmosphere. Releases stop feeling like a mad dash and start feeling steady, predictable, almost routine.

With the grunt work handled automatically, no one’s double-checking scripts or fiddling with configs at the last minute.

Predictability counts every bit as much as speed or quality. When you can trust that each release will walk the same path and land in the same place, you can plan without the stomach knots. It’s the gap between lobbing code into the dark and sending it down a lit, signposted road you’ve travelled a hundred times before.

And because the steps run the same way every time, quality isn’t a scramble at the end — it’s built into the flow, with tests quietly flagging issues before they ever become an end-of-day (or worse, 2 AM) crisis.

Shipping small changes often isn’t just a speed thing; it’s about reducing the blast radius when something goes wrong. A tiny change is quick to diagnose and fix, while a huge bundle of updates can hide a single line of code that quietly breaks everything.

Meanwhile, confidence builds. Developers know that if their code clears the pipeline, it’s solid enough to go live. New team members don’t need weeks of handholding to learn how to ship — they can plug into the process and focus on writing good code. And because the pipeline keeps a record of what happened and when, everyone has a clearer view of progress, setbacks, and where to improve next time.

Some of the biggest wins teams notice are:

- Fewer mistakes thanks to consistent, automated steps.

- Faster fixes because problems are caught early and in smaller chunks of work.

- Less stress — launches feel routine instead of risky.

- Better visibility into what’s working and what needs attention.

The 4 phases of the continuous delivery pipeline

No two pipelines are identical, but most follow a similar rhythm. The idea is to move changes forward in small, safe steps — each one tested and approved before it goes any further. Here’s how it typically unfolds.

1. Building and testing the pieces

It begins small — a single service, a shared library, maybe just a feature branch. The code gets a once-over from another developer, lands in the shared repo, and kicks off a set of lean, targeted tests. They’re quick by design, built to catch problems before they grow teeth.

2. Assembling subsystems

Next, components come together into deployable subsystems. These are bigger building blocks — like a backend API, a database service, or a UI layer — that can run on their own. They’re tested in an environment that mimics production as closely as possible.

The checks here go deeper:

- Functional tests to make sure features do what they’re supposed to.

- Regression tests to confirm nothing old has broken.

- Performance and security checks to keep the system stable and safe.
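One way to picture this stage is as a gate that runs every category of check and reports everything that failed, rather than stopping at the first problem. The sketch below assumes that design. `evaluate_gate` and the check names are hypothetical, and each lambda stands in for a real test suite.

```python
from typing import Callable, Dict, List


def evaluate_gate(checks: Dict[str, Callable[[], bool]]) -> List[str]:
    """Run every check and return the names of the ones that failed."""
    failures = []
    for name, check in checks.items():
        try:
            passed = check()
        except Exception:
            passed = False  # a check that crashes counts as a failure
        if not passed:
            failures.append(name)
    return failures


# Hypothetical results for one subsystem in a production-like environment.
subsystem_checks = {
    "functional": lambda: True,
    "regression": lambda: False,
    "performance": lambda: True,
    "security": lambda: False,
}
failures = evaluate_gate(subsystem_checks)
promote = not failures  # only promote when nothing failed
print(failures)
```

Collecting every failure in one pass means the team sees the full picture from a single run instead of fixing one issue only to discover the next.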

3. Moving to production

Once a subsystem — or the whole system — clears its checks, it’s ready for production. Some teams let changes go live automatically; others prefer a manual go-ahead, especially for releases targeting a specific user group before a full rollout. And if there’s ever doubt? The pipeline can freeze the release until the issue’s sorted.

One popular safeguard here is “zero downtime deployment”: a way of rolling out changes without anyone noticing a flicker in service. A common flavour is “blue-green deployment”: you run the old version (“blue”) and the new version (“green”) side by side, verify green while blue keeps serving users, and switch traffic over only once you’re sure it’s solid. Some teams first send a small slice of users to green, a technique usually called a canary release. If something’s off, you simply route people back to blue, and most will never know a thing happened.
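The routing logic behind this can be sketched in a few lines of Python. This is an illustration under simplifying assumptions, not how any particular load balancer works: `BlueGreenRouter` and its methods are hypothetical, and real traffic switching usually happens in a load balancer or DNS layer rather than in application code.

```python
import hashlib


class BlueGreenRouter:
    """Decides which version a user sees: old ("blue") or new ("green")."""

    def __init__(self) -> None:
        self.green_share = 0  # percentage of users routed to green

    def _bucket(self, user_id: str) -> int:
        # A stable hash keeps each user in the same bucket (0-99) on every request.
        digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
        return int(digest, 16) % 100

    def route(self, user_id: str) -> str:
        return "green" if self._bucket(user_id) < self.green_share else "blue"

    def trial(self, percent: int) -> None:
        """Send a small slice of users to green first."""
        if not 0 <= percent <= 100:
            raise ValueError("percent must be between 0 and 100")
        self.green_share = percent

    def switch_over(self) -> None:
        """Green looks solid: send everyone there."""
        self.green_share = 100

    def roll_back(self) -> None:
        """Something is off: route everyone back to blue."""
        self.green_share = 0


router = BlueGreenRouter()
router.trial(10)       # roughly a tenth of users see green
router.switch_over()   # everyone on green
router.roll_back()     # everyone back on blue
```

Because both versions stay running, rolling back is just a routing change rather than a redeploy, which is what makes the switch feel invisible to users.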

4. Running in parallel

For simple products, stages are run consecutively. In more complex setups, multiple pipelines work in parallel, merging near the end. It’s faster, but it demands tight coordination — especially when manual checks are still part of the process.
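As a rough sketch of the parallel case, the Python below runs one pipeline per subsystem at the same time and only allows the merge when all of them pass. The function names and the `api` and `ui` subsystems are hypothetical, and each check is a placeholder for a real test stage.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Tuple

Check = Callable[[], bool]


def run_subsystem_pipeline(name: str, checks: List[Check]) -> Tuple[str, bool]:
    """Run one subsystem's checks in order, stopping at the first failure."""
    for check in checks:
        if not check():
            return name, False
    return name, True


def run_in_parallel(pipelines: Dict[str, List[Check]]) -> Dict[str, bool]:
    """Run every subsystem pipeline concurrently and collect the outcomes."""
    workers = max(1, len(pipelines))  # ThreadPoolExecutor needs at least one worker
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [
            pool.submit(run_subsystem_pipeline, name, checks)
            for name, checks in pipelines.items()
        ]
        return dict(future.result() for future in futures)


def ready_to_merge(results: Dict[str, bool]) -> bool:
    """The pipelines merge only if every subsystem passed."""
    return all(results.values())


pipelines = {
    "api": [lambda: True, lambda: True],
    "ui": [lambda: True, lambda: False],
}
results = run_in_parallel(pipelines)
print(results, ready_to_merge(results))
```

The merge point is where the coordination cost shows up: one failing subsystem holds back the combined release, which is why any remaining manual checks need clear owners.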

Breaking work into clear phases keeps releases predictable, makes issues easier to trace, and avoids the stress of “big bang” launches. The pipeline shifts from being a hurdle to a steady rhythm everyone works to.

What does “shift left” mean?

Even the slickest pipeline will tank if security and quality checks are tacked on at the end. Shift them upstream. Catch bugs, vulnerabilities, and weak spots while the code’s still warm in a developer’s mind, not weeks later when the trail’s gone cold.

In DevOps circles, you’ll often hear this called “shifting left”: pulling quality and security checks as close to the start of development as you can. Pair that with “DevSecOps” thinking, which treats security not as a late-stage hurdle but as something baked into the recipe from day one. These aren’t just buzzwords; they’re a reminder that prevention beats cure, especially when fixing a problem later means combing through weeks of changes.

That might mean running static analysis alongside unit tests, scanning third-party libraries for known flaws, or setting performance benchmarks that each component has to hit before moving forward.
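Two of those early checks are easy to sketch in Python. Everything here is made up for illustration: the package names, versions, advisory list, and timing numbers are hypothetical, and a real setup would pull advisories from a vulnerability database and timings from actual benchmark runs.

```python
from typing import Dict, List, Set, Tuple


def find_vulnerable(
    dependencies: Dict[str, str],
    advisories: Dict[str, Set[str]],
) -> List[Tuple[str, str]]:
    """Return (package, version) pairs that appear in the advisory list."""
    return sorted(
        (name, version)
        for name, version in dependencies.items()
        if version in advisories.get(name, set())
    )


def over_budget(
    measured_ms: Dict[str, float],
    budget_ms: Dict[str, float],
) -> List[str]:
    """Return the operations whose measured time exceeds their budget."""
    return sorted(
        name
        for name, elapsed in measured_ms.items()
        if elapsed > budget_ms.get(name, float("inf"))  # no budget means no limit
    )


# Hypothetical pinned dependencies and known-flaw list.
dependencies = {"examplelib": "1.2.0", "otherlib": "3.1.4"}
advisories = {"examplelib": {"1.2.0", "1.2.1"}}

# Hypothetical benchmark results and agreed budgets, in milliseconds.
measured_ms = {"login": 180.0, "search": 420.0}
budget_ms = {"login": 200.0, "search": 300.0}

flagged = find_vulnerable(dependencies, advisories)
slow = over_budget(measured_ms, budget_ms)
block_merge = bool(flagged or slow)
print(flagged, slow, block_merge)
```

Running checks like these on every commit, next to the unit tests, is what puts the feedback in front of the developer while the change is still fresh.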

Other traps to avoid

One trap is bundling every subsystem into one mega-release, only to have a single delay stall the whole lot. Another is drowning the process in manual sign-offs that slow things down without adding much safety, especially if the people signing off don’t have the full technical picture. The sweet spot is letting automation handle the routine checks, and saving human input for the calls that actually need it.

It also helps if the pipeline can nudge people automatically — sending alerts, surfacing feedback, and showing clear progress charts so everyone can see how their work fits into the whole. It’s a small thing that makes the whole process sharper and more open.

How to keep the pipeline (and your team) in sync

A good delivery pipeline is like muscle memory — the more you use it, the smoother every move feels. You’re not stopping to think about which script to run or who needs to sign off; the rhythm just happens. But that rhythm only holds if every part of the process is talking to the others.

The last, and maybe most overlooked, step in building a delivery pipeline is picking a project management platform that actually ties the whole thing together. Not necessarily the flashiest tool, but the one that plays nicely with your source control (Git, Subversion) and has enough plug-ins to bend to your workflow instead of the other way round.

Backlog was made for software delivery

Backlog, our own platform, keeps the moving parts visible, the feedback loops tight, and the whole team on the same page — whether they’re committing code, running tests, or watching the release go live.

Instead of chasing status updates or wondering where something’s stuck, you can see it all in one place and act before small issues turn into release blockers.

The pipeline will take care of the code; Backlog takes care of the people running it. And when both are in sync, shipping fast and safe stops feeling like a goal — and starts feeling like your default.

Want to learn more about the full software development lifecycle? Make sure to check out our DevOps Guide!

This post was originally published on July 2, 2020, and updated most recently on October 1, 2025.